This is a special issue of Chance News. It contains only one item, the story 'The Chance of a Prime'. The more traditional Chance News will be sent out soon.

In 1998 the Mathematical Sciences Research Institute in Berkeley, California, held a three-day conference on Mathematics and the Media. The purpose of this conference was to bring together science writers and mathematicians to discuss ways to better inform the public about mathematics and new discoveries in mathematics. As part of the conference, the organizers asked Peter Sarnak, from Princeton University, to talk about new results in mathematics that he felt the science writers might like to write about. He chose as his topic "The Riemann Hypothesis." This is generally considered the most famous unsolved problem in mathematics and is the major focus of Sarnak's research.

In his talk, Sarnak described some fascinating new connections between the Riemann Hypothesis, physics and random matrices. He used only mathematics that one would meet in calculus and linear algebra. Sarnak's lecture, and a discussion of his talk by the science writers, can be found here under "Mathematics for the Media". (Readers might also enjoy the lecture by Larry Gonick, author of "The Cartoon Guide to Statistics.")

Unfortunately, the discussion of Sarnak's talk by the science writers revealed that most of them were completely lost. The first science writer commenting on the talk said that she felt the way she had at a party with German friends. Her friends would try to speak English for a while but would lapse in and out of German, so she understood very little of the conversation.

Even though Sarnak's talk was not a great experience for most of the science writers, it was an excellent expository talk given by a great mathematician. Our own enjoyment of this talk suggested to us that readers of Chance News would also enjoy it. However, we felt that it would help to have some written commentary to go with the video. Our colleague Dan Rockmore offered to provide such a commentary for us. Dan's first installment, presented below, relates to the first half of Sarnak's talk, which deals with properties of prime numbers and the history of the Riemann Hypothesis. In future issues of Chance News Dan will provide commentaries related to the second half of Sarnak's talk, in which he explains the connection between the Riemann Hypothesis and physics through random matrices.

Another interesting popular talk on the Riemann Hypothesis was given recently by Jeff Vaaler at the University of Texas. The Clay Mathematics Institute has offered million-dollar prizes for solutions to seven famous outstanding problems in mathematics, one of which is the Riemann Hypothesis. The University of Texas mathematics department held a series of Millennium Lectures for the general public, with a lecture on each of the seven problems, and Jeff gave the lecture on the Riemann Hypothesis. The original video of this lecture was hard to follow because the slides were difficult to read. The Clay Institute provided us with the original tape and the slides, and we have added the lecture to the Chance Lecture Series using the format that photographer Bob Drake developed for our Chance Lectures, which makes the slides easy to read. You can find Vaaler's lecture at the Chance Lecture Series under "Other Lectures". You can skip most of the introduction by starting 6 minutes into the video.

To watch these videos you will need the free RealOne Player, which you can obtain here. Finding the free player might seem like a game of hide-and-seek, but keep on the free path and you will succeed. You will also need the RealPlayer plug-in, which comes with recent versions of RealPlayer. Finally, you will need an Internet connection running at a true 56 Kbps or faster.

We encourage you to read Dan's commentary on the web before printing it, in order to see the wonderful interactive graphics that our colleague Peter Kostelec provided for us. We suggest reading Dan's commentary first, then watching Jeff Vaaler's talk, and then Sarnak's talk for the final word. You will then be in a position to fully appreciate the news, and the movie, when this famous problem is finally solved.

HERE IS EPISODE ONE!

Chance in the Primes

Dan Rockmore

We hardly ever think of the good old natural numbers as a place for chance or randomness. Much of mathematics may seem inscrutable, but the numbers 1, 2, 3, ... spill out, known and familiar, grains making up a mathematical salt of the earth. Nevertheless, the part of the natural numbers we truly understand is their additive structure. Getting from one number to another by addition or subtraction poses no mysteries. It is only when we start to think of things multiplicatively that the trouble starts and the surprises enter. In a recent MSRI lecture, Sarnak discusses the ways in which probability and statistics (in short, chance) are helping to unravel some of the prime mysteries.


The prime numbers are the basic multiplicative building blocks, and a great deal of ongoing work aims to understand how they are distributed among the natural numbers. As you list them, they seem to fall by chance: 2, 3, 5, 7, 11, 13, .... Since the time of Euclid we have known that there are infinitely many primes. His proof is easy to restate. Given any finite collection of primes $p_1, p_2, \ldots, p_n$, the number

$$N = p_1 p_2 \cdots p_n + 1$$

is not divisible by any of $p_1, \ldots, p_n$, since dividing $N$ by any one of them leaves a remainder of 1. Because $N > 1$, it has at least one prime factor, and that prime cannot be among $p_1, \ldots, p_n$. So any finite set of primes produces a prime it does not contain; no finite set of primes can be complete, and there must be infinitely many.
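Euclid's construction is concrete enough to run. The sketch below is our own illustration (the function names are hypothetical, not from Sarnak's lecture): it forms $N = p_1 \cdots p_n + 1$ and returns its smallest prime factor by trial division. Note that $N$ itself need not be prime; for the first six primes, $N = 30031 = 59 \times 509$, and the new prime the argument delivers is 59.

```python
from math import prod


def smallest_prime_factor(n: int) -> int:
    """Smallest prime factor of n >= 2, found by trial division."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d  # the first divisor found is necessarily prime
        d += 1
    return n  # no divisor up to sqrt(n), so n itself is prime


def euclid_new_prime(primes: list[int]) -> int:
    """Given a finite list of primes, return a prime not in the list (Euclid's argument)."""
    n = prod(primes) + 1  # leaves remainder 1 on division by every p in primes
    q = smallest_prime_factor(n)  # n > 1, so it has at least one prime factor
    assert q not in primes
    return q


print(euclid_new_prime([2, 3, 5, 7, 11, 13]))  # 30031 = 59 * 509, so this prints 59
```

The new prime can even be smaller than the ones we started with: from $\{3, 5\}$ we get $N = 16$, whose only prime factor is 2.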


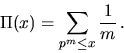

Knowing that there are infinitely many primes is just the beginning; the next step is to quantify this. More precisely, we define the function $\pi(x)$, the number of primes less than or equal to $x$,

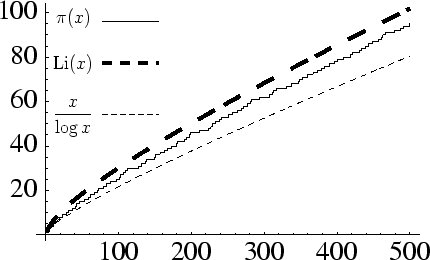

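and we study the asymptotics of this function. Based on extensive calculation, Gauss first conjectured, and Hadamard and de la Vallée Poussin ultimately proved (1896), the "Prime Number Theorem"

$$\pi(x) \sim \frac{x}{\log x},$$

that is, with $\log$ the natural logarithm,

$$\lim_{x \to \infty} \frac{\pi(x)}{x / \log x} = 1.$$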

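In fact, Gauss had conjectured a more precise estimate (one that implies the Prime Number Theorem), namely that

$$\pi(x) \sim \operatorname{Li}(x),$$

where

$$\operatorname{Li}(x) = \int_2^x \frac{dt}{\log t}.$$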

As an aside, the comparison of $\pi(x)$ and $\operatorname{Li}(x)$ is the source of one of the largest numbers ever written in a math paper. It is known that $\operatorname{Li}(x)$ is not always greater than $\pi(x)$, but the first place where $\pi(x)$ overtakes it may be around ![]() .
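To see how much better $\operatorname{Li}(x)$ tracks $\pi(x)$ than $x/\log x$ does, here is a small sketch of our own (the names `prime_sieve`, `prime_pi` and `Li` are hypothetical). It counts primes with the sieve of Eratosthenes and evaluates $\operatorname{Li}(x)$ numerically. The substitution $t = e^u$ turns the integral into $\int_{\log 2}^{\log x} e^u/u\,du$, a smooth integrand that composite Simpson's rule handles accurately; that quadrature choice is ours, not anything prescribed by the text.

```python
import math


def prime_sieve(n: int) -> list[bool]:
    """Sieve of Eratosthenes: is_prime[k] is True exactly when k is prime (n >= 1)."""
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(n) + 1):
        if is_prime[p]:
            # Cross out the multiples of p, starting from p*p.
            is_prime[p * p :: p] = [False] * len(range(p * p, n + 1, p))
    return is_prime


def prime_pi(x: int) -> int:
    """pi(x): the number of primes less than or equal to x."""
    return sum(prime_sieve(x)) if x >= 2 else 0


def Li(x: float, steps: int = 10_000) -> float:
    """Offset logarithmic integral Li(x) = integral from 2 to x of dt / log t, for x > 2.

    With t = e^u the integrand becomes e^u / u on [log 2, log x];
    composite Simpson's rule is applied there (steps must be even).
    """
    a, b = math.log(2), math.log(x)
    h = (b - a) / steps
    f = lambda u: math.exp(u) / u
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


for x in (10**3, 10**4, 10**5, 10**6):
    print(x, prime_pi(x), round(x / math.log(x)), round(Li(x)))
```

The prime counts printed are the classical values 168, 1229, 9592 and 78498. At $x = 10^6$, $x/\log x$ falls short of $\pi(x)$ by several thousand, while $\operatorname{Li}(x)$ overshoots by only about 130.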

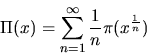

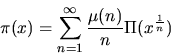

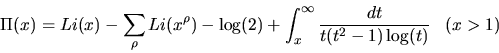

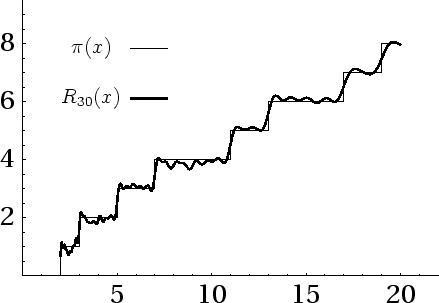

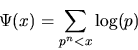

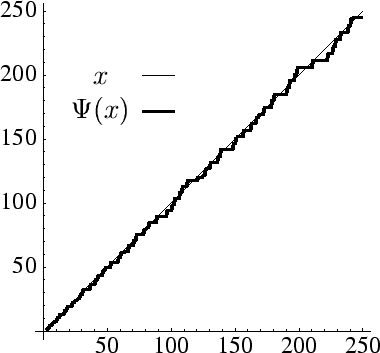

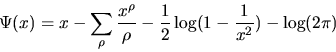

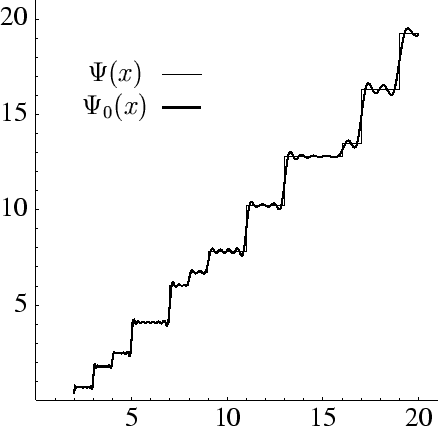

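Now, asymptotics are fine, but what really matters is getting the estimate as accurate as possible. That is, we'd like to be able to write

$$\pi(x) = \operatorname{Li}(x) + E(x),$$

where the error term $E(x)$ is as small as we can prove it to be.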

For

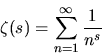

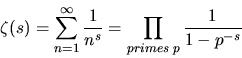

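One obvious connection to the primes is through Euler's product formula: for $s$ with real part greater than 1,

$$\zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^s} = \prod_{p \text{ prime}} \frac{1}{1 - p^{-s}}.$$

The formula results from expanding each of the factors on the right as a geometric series,

$$\frac{1}{1 - p^{-s}} = 1 + p^{-s} + p^{-2s} + \cdots,$$

and noting that their product is a sum of terms of the form

$$\left(p_1^{a_1} p_2^{a_2} \cdots p_k^{a_k}\right)^{-s},$$

where $p_1, \ldots, p_k$ are distinct primes and $a_1, \ldots, a_k \ge 1$ (with $n = 1$ coming from the empty product). By unique factorization, every natural number $n$ appears in exactly one such term, so the product equals $\sum_n n^{-s}$.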
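Euler's product can be checked numerically. The sketch below is our own illustration with hypothetical helper names: it compares a partial sum of $\sum n^{-s}$ with a partial product over the primes at $s = 2$, where, as Euler also showed, both sides equal $\pi^2/6$.

```python
import math


def primes_up_to(n: int) -> list[int]:
    """All primes <= n (n >= 2), via the sieve of Eratosthenes."""
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(n) + 1):
        if is_prime[p]:
            is_prime[p * p :: p] = [False] * len(range(p * p, n + 1, p))
    return [k for k, flag in enumerate(is_prime) if flag]


def zeta_partial_sum(s: float, N: int) -> float:
    """Left side of Euler's formula, truncated: sum of n^(-s) for n = 1..N."""
    return math.fsum(n ** -s for n in range(1, N + 1))


def euler_partial_product(s: float, P: int) -> float:
    """Right side, truncated: product of 1 / (1 - p^(-s)) over primes p <= P."""
    return math.prod(1 / (1 - p ** -s) for p in primes_up_to(P))


s = 2
print(zeta_partial_sum(s, 10**5), euler_partial_product(s, 10**5), math.pi**2 / 6)
```

Both truncations agree with $\pi^2/6 \approx 1.6449$ to about five decimal places; the product converges a little faster because it skips over the composite numbers, which it accounts for automatically.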

Riemann's great contribution was in linking the zeros of the zeta function to the asymptotics of $\pi(x)$. He first showed that even though $\zeta(1) = \sum_n 1/n$ diverges to infinity, the zeta function has a consistent definition at all other points in the complex plane. In other words, while it is easy to see that $\zeta(s) = \sum_n n^{-s}$ makes sense for any $s$ with real part greater than $1$, he was able to find an analytic continuation of $\zeta(s)$ to the entire complex plane, outside of a singularity at $s = 1$. Part of this work was the discovery of the "functional equation", which relates the values of $\zeta(s)$ to those of $\zeta(1 - s)$, like some funny sort of mirror symmetry about the line $\operatorname{Re}(s) = 1/2$.
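The functional equation can be tried out numerically for real $s$. The sketch below is our own illustration (`zeta_real` is a hypothetical name). It uses the standard form of the equation, $\zeta(s) = 2^s \pi^{s-1} \sin(\pi s/2)\,\Gamma(1-s)\,\zeta(1-s)$, so that a value at $s < 0$ needs only $\zeta(1-s)$ with $1 - s > 1$, where the series converges. The factor $\sin(\pi s/2)$ vanishes at $s = -2, -4, -6, \ldots$, and at $s = -1$ the continuation produces the famous value $\zeta(-1) = -1/12$.

```python
import math


def zeta_real(s: float, terms: int = 100_000) -> float:
    """Riemann zeta for real s > 1 (series) or real s < 0 (functional equation)."""
    if s > 1:
        partial = math.fsum(k ** -s for k in range(1, terms + 1))
        # Euler-Maclaurin estimate of the tail: sum over k > terms of k^(-s)
        tail = terms ** (1 - s) / (s - 1) - terms ** -s / 2
        return partial + tail
    if s < 0:
        # zeta(s) = 2^s pi^(s-1) sin(pi s / 2) Gamma(1 - s) zeta(1 - s), with 1 - s > 1
        return (2 ** s * math.pi ** (s - 1) * math.sin(math.pi * s / 2)
                * math.gamma(1 - s) * zeta_real(1 - s, terms))
    raise ValueError("this sketch only handles real s > 1 or s < 0")


for s in (-1, -2, -3, -4):
    print(s, zeta_real(s))
```

The odd negative integers give nonzero values ($-1/12$ and $1/120$), while the even ones come out as zero up to floating-point rounding, since the computed value of $\sin(\pi s/2)$ is not exactly 0.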

The functional equation immediately shows that there are some easy-to-find zeros: the numbers -2, -4, -6, .... These are called the trivial zeros. The functional equation also easily shows that any other zeros must have real part between 0 and 1, the so-called "critical strip"! It is these nontrivial zeros that are all the rage. Riemann conjectured (get ready: this is the infamous hypothesis!) that the nontrivial zeros all split the critical strip down the middle:

$$\zeta(\rho) = 0 \text{ and } 0 < \operatorname{Re}(\rho) < 1 \implies \operatorname{Re}(\rho) = \tfrac{1}{2}.$$

This has been checked into the millions; that is, all the zeros in the critical strip with imaginary part into the millions are actually on the line $\operatorname{Re}(s) = 1/2$.
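Checking a zero on the critical line means evaluating $\zeta(s)$ for complex $s$ with $0 < \operatorname{Re}(s) < 1$, where the defining series diverges. The sketch below uses one standard route, Peter Borwein's 1991 alternating-series algorithm, as our own choice for illustration (it is not the method behind the large-scale verifications, and the names and the default $n = 60$ are ours). It writes $\zeta(s) = \eta(s)/(1 - 2^{1-s})$, with $\eta$ the alternating zeta series, and accelerates $\eta$ with exact rational weights $d_k$. The imaginary parts of the first three nontrivial zeros used below are the standard tabulated values.

```python
import math
from fractions import Fraction


def borwein_weights(n: int) -> list[Fraction]:
    """d_k = n * sum_{i=0}^{k} (n+i-1)! 4^i / ((n-i)! (2i)!), for k = 0..n, as exact rationals."""
    d, running = [], Fraction(0)
    for i in range(n + 1):
        running += Fraction(math.factorial(n + i - 1) * 4**i,
                            math.factorial(n - i) * math.factorial(2 * i))
        d.append(n * running)
    return d


def zeta(s: complex, n: int = 60) -> complex:
    """Riemann zeta for Re(s) > 0, s != 1, via Borwein's algorithm:

    zeta(s) ~ -1 / (d_n (1 - 2^(1-s))) * sum_{k=0}^{n-1} (-1)^k (d_k - d_n) / (k+1)^s
    """
    d = borwein_weights(n)
    dn = d[n]
    total = 0j
    for k in range(n):
        weight = float((d[k] - dn) / dn)  # computed exactly, rounded once to a float
        total += (-1) ** k * weight / (k + 1) ** s
    return -total / (1 - 2 ** (1 - s))


# Imaginary parts of the first three nontrivial zeros.
for t in (14.134725141734693, 21.022039638771555, 25.010857580145688):
    on_line = abs(zeta(0.5 + 1j * t))   # tiny: a zero on Re(s) = 1/2
    off_line = abs(zeta(0.6 + 1j * t))  # clearly nonzero just off the line
    print(t, on_line, off_line)
```

The same function reproduces $\zeta(2) = \pi^2/6$ to full double precision, which is a useful sanity check before trusting it inside the critical strip.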

In order to relate